Moving my personal infrastructure to Kubernetes (single-node k3s)

I recently moved my personal infrastructure from a few VMs managed by Ansible to a single-node Kubernetes cluster. I will cover the hardware part in the next post. First, let me explain why k8s.

How I got there

The way I manage my personal infrastructure has evolved over the years. I started on my Raspberry Pi and then got my first VPS 10 years ago (link to my French blog). I started hosting more things, like my French WordPress blogs, ownCloud, etc. I started developing a passion for hosting providers and I tested dozens of them, moving from VPS to VPS and baremetal servers.

I was managing things by hand until I got my first job in 2017 and discovered Infrastructure as Code with Chef and then Ansible. That year I started hosting my Mastodon instance, which is when I started needing more power, as I quickly got to about 20k registered users. I also started hosting public services, most of which are retired now, as I quickly discovered it was not sustainable for me, neither financially nor in terms of the time needed to manage all of this.

In 2018 I really started getting into Docker and started building my own images as well. I was managing my services manually through docker-compose.

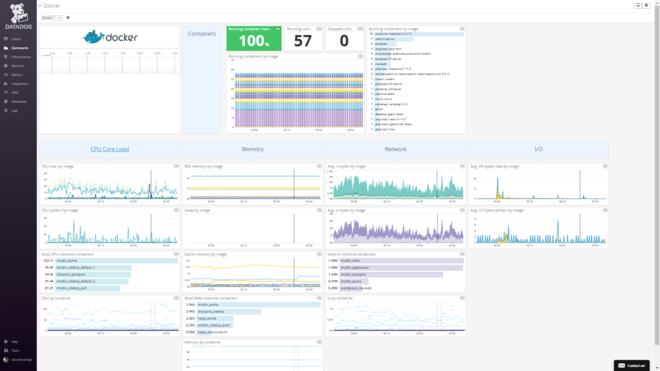

I found this old screenshot of Datadog. At one point, I had 57 containers managed manually!

Then I fell in love with LXC and LXD, LXC being the bare-bones Linux containers and LXD being a wrapper with a daemon that makes it much easier to manage the images, the network, the filesystem, etc. I drafted a post years ago to explain my setup but never got around to publishing it. Today, LXD has been forked to Incus.

Since my first Raspberry Pi in 2014, I have always been using Debian on my servers. It’s simple and rock solid, and the slow release cycle suits me well. On the client side, things were different though! I have been running macOS for a while, but before that, I was a big distro hopper, and I loved Arch Linux and NixOS. Anyway, the only time I didn’t use Debian was for LXD, because Canonical distributed it via Snap and the whole experience was just smoother with ZFS as well.

    root@lxd ~# lxc ls
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | NAME          | STATE        | IPV4                    | IPV6                                          | TYPE      | SNAPSHOTS |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | caddy         | RUNNING      | 10.80.245.117 (eth0)    | fd42:c932:3155:b7e7:216:3eff:feaf:7a49 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | elasticsearch | RUNNING      | 10.80.245.130 (eth0)    | fd42:c932:3155:b7e7:216:3eff:fe79:33a0 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | freshrss      | RUNNING      | 10.80.245.29 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fedb:f29d (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | ghost         | RUNNING      | 10.80.245.236 (eth0)    | fd42:c932:3155:b7e7:216:3eff:fec3:bb24 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | isso          | RUNNING      | 10.80.245.203 (eth0)    | fd42:c932:3155:b7e7:216:3eff:fe4c:335b (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | kpop          | RUNNING      | 10.80.245.45 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fe39:db60 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | masto         | RUNNING      | 10.80.245.66 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fea1:6fa8 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | matomo        | RUNNING      | 10.80.245.250 (eth0)    | fd42:c932:3155:b7e7:216:3eff:fe4f:65e1 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | mstdn         | RUNNING      | 10.80.245.48 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fef8:715d (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | mysql         | RUNNING      | 10.80.245.19 (eth0)     | fd42:c932:3155:b7e7:216:3eff:febe:aae5 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | postgresql    | RUNNING      | 10.80.245.87 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fe38:fcc1 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | privatebin    | RUNNING      | 10.80.245.86 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fe07:f0a1 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | shaarli       | RUNNING      | 10.80.245.54 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fee5:f192 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+
    | wordpress     | RUNNING      | 10.80.245.15 (eth0)     | fd42:c932:3155:b7e7:216:3eff:fe88:9998 (eth0) | CONTAINER | 0         |
    +---------------+--------------+-------------------------+-----------------------------------------------+-----------+-----------+

At that point, I had found my love at Hetzner Cloud and everything was running on a big VM with 16 GB of RAM. I went all-in on Ansible and created around 40 roles which I still use to this day!

I got very into ZFS with all its amazing features although, amusingly, it caused me to lose data. I was using ZFS to run my Docker containers and then my LXD containers, mainly as a way to reduce storage usage with Copy on Write and compression. Compression is really amazing, especially for databases!

Since ZFS is closely linked to FreeBSD, I also started getting into FreeBSD, although I never actually moved to it as it’s much more tedious to run stuff on it. The world uses Linux, there is no point in going against the flow. I dropped ZFS on my servers since my data loss story, although I’m still using it for my home server with Proxmox, but this time with two proper physical SSDs.

I think I ditched LXD a few years ago when I started working at a Cloud provider and had access to a bit more infrastructure to host my things. I switched to running things in a few VMs instead, to make things simpler. This wasn’t a dramatic change as I was basically running my LXD server as a hypervisor with small VMs.

I kept this setup for the last 5 years, scattered around in different VMs and cloud providers. I reduced the number of services I host, the last one I removed being the Tor relay as I no longer feel comfortable hosting it.

For various reasons, I recently needed to move some of my workloads somewhere else, so the question of my infrastructure came up again. Actually, two questions: first, where do I host my stuff (next article), and second, how do I manage it.

Ansible is fine for server stuff like the OS or the “server” dependencies such as databases, reverse proxies, and so on. But I never fully managed my apps with Ansible. I only manage the OS dependencies and the systemd units as well as the reverse proxy configs. The app code itself, the config, etc. are not managed. I also have to handle the updates myself. In some cases this is super easy, such as Miniflux, which provides an APT repository. For others, like Mastodon, I have to git checkout the new version, compile or install the new Ruby and Node versions, install the dependencies, compile the assets, run the database migrations, etc. It’s still a pretty manual process. Of course, I could write scripts or Ansible playbooks for that, but for some reason, I never really wanted to do it.

Back to Docker?

Now, some of these pains would be alleviated by using Docker. I started considering going back to Docker. First, using docker-compose, like I did years ago. The issue with that approach is that it’s still, although much less, manual. For example, I would still have to edit my compose files manually, run the updates manually, etc.

My ideal setup would be a fully declarative approach in a single git repository, and apply everything on push. There’s probably a way to achieve this by duct-taping a few scripts and CI, but I didn’t want to go that route.

That kind of workflow (also known as GitOps) doesn’t seem to be widely used outside of Kubernetes, even with Docker Swarm. The only thing I could find was a Portainer feature that uses webhooks to trigger deployments. This could work, but I was surprised to not find anything else. That seems like a missed opportunity.

Thus, I started experimenting with k8s.

Why Kubernetes

The good thing about Kubernetes is that you can do everything declaratively. Paired with a GitOps approach, which I’ll detail more in another post, it means that there is no risk of manual things being done that are not defined in YAML, in the git repo. This makes it easier to set up and maintain everything.

You could argue that you achieve the same with Ansible, which is true, although it requires a bit of work. The thing is that with Ansible, I’m quite dependent on the host operating system. I need to install the various runtimes and OS dependencies, etc. If the OS (in my case, Debian) doesn’t provide the correct version that I need, then I need to use custom APT repositories, etc. That makes it harder as well to do major OS upgrades.

With Kubernetes and containers in general, I can have containers running Postgres 14 or 17, Node 12 or 22, etc. It’s much easier to manage.
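As a rough illustration of that point, two Pods can pin different major versions of the same software side by side on the same host. This is a minimal sketch rather than a real manifest from the setup described here: the Pod names, the `demo` namespace and the `pg-demo` Secret are all made up for the example.

```yaml
# Sketch only: names, namespace and Secret are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: postgres-14
  namespace: demo
spec:
  containers:
    - name: postgres
      image: postgres:14          # old major version, pinned by tag
      env:
        - name: POSTGRES_PASSWORD # required by the official image on first start
          valueFrom:
            secretKeyRef:
              name: pg-demo
              key: password
---
apiVersion: v1
kind: Pod
metadata:
  name: postgres-17
  namespace: demo
spec:
  containers:
    - name: postgres
      image: postgres:17          # new major version, same host, no conflict
      env:
        - name: POSTGRES_PASSWORD
          valueFrom:
            secretKeyRef:
              name: pg-demo
              key: password
```

Neither version depends on what the host distribution packages, which is the whole point.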

In terms of applications, as explained earlier, applications which have a runtime (ruby, node, etc.) require some work to be installed and kept up-to-date. The containers ship with the runtime and dependencies, so the problem is solved. (It doesn’t mean that container images shouldn’t be updated though 😄).

With Kubernetes, I can completely manage my applications and their OS dependencies such as databases, declaratively.

It’s very common to hear that Kubernetes is a nightmare, that it’s very complex. It might be, internally. It might also be, at scale. At work, for example, we use AWS EKS and fortunately have a team of engineers taking care of it, and even there it doesn’t seem to be that bad.

However, for personal infrastructure, it can be too complex, but it doesn’t have to be. You don’t need to use many nodes, complex network setups for high availability, or complex volume setups, etc.

To be honest, I would be happy with a less complex solution than k8s and all its moving parts. I think having a single baremetal node reduces that complexity quite a lot, but I would be happy with Docker Swarm, probably.

Funnily enough, I stumbled upon this comment on Hacker News recently:

You can run single-node k3s on a VM with 512MB of RAM and deploy your app with a hundred lines of JSON, and it inherits a ton of useful features that are managed in one place and can grow with your app if/as needed. These discussions always go in circles between Haters and Advocates:

- H: “kubernetes [at planetary scale] is too complex”

- A: “you can run it on a toaster and it’s simpler to reason about than systemd + pile of bash scripts”

- H: “what’s the point of single node kubernetes? I’ll just SSH in and paste my bash script and call it a day”

- A: “but how do you scale/maintain that?”

- H: “who needs that scale?”

To some extent, I do agree it’s easier to reason about! Maybe not for standalone single-binary deployments, but when you need multiple components working together, a true declarative approach works wonders.

For example, here is my config for umami:

    ➜ flux git:(main) tree apps/umami
    apps/umami
    ├── cnpg
    │   ├── backup.yaml
    │   ├── cluster.yaml
    │   ├── kustomization.yaml
    │   └── secret-s3.yaml
    ├── deployment.yaml
    ├── ingress.yaml
    ├── kustomization.yaml
    ├── namespace.yaml
    └── service.yaml

    2 directories, 9 files

Instead of having multiple ansible roles to:

- install node

- install postgres

- create databases, users

- install umami

- create user

- create systemd service

- install dependencies

- build next.js app

- install the reverse proxy

- setup the reverse proxy

I just have a few YAML files that contain the desired state. In contrast, Ansible looks more like bash scripts written in YAML: it’s a hybrid between imperative and declarative, in my opinion.
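For illustration, the `kustomization.yaml` at the root of a layout like `apps/umami` usually does little more than list the other manifests. The following is a minimal sketch based only on the file names in the tree above; the actual contents are an assumption.

```yaml
# apps/umami/kustomization.yaml
# Illustrative sketch: the real file may differ.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - namespace.yaml
  - cnpg              # a directory with its own kustomization.yaml
  - deployment.yaml
  - service.yaml
  - ingress.yaml
```

Everything the app needs is reachable from this one file, which is what makes the directory self-contained.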

The abstractions provided by k8s are simple and easy to reason about as well. If you keep your setup simple, it doesn’t have to get crazy complex.

But adhering to GitOps is an important part of this (I chose Flux), otherwise you start running one-off kubectl apply commands and that’s when you start losing control of your cluster.
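To make the GitOps part a bit more concrete, here is a minimal sketch of the two Flux objects that typically drive such a setup: a `GitRepository` that tells Flux where the repo lives, and a `Kustomization` that applies a path from it on an interval. The repository URL, object names and path are hypothetical placeholders, not the real configuration.

```yaml
# Sketch only: URL, names and path are hypothetical.
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: flux-system
  namespace: flux-system
spec:
  interval: 1m                 # how often Flux checks the repo for new commits
  url: https://example.com/me/flux.git
  ref:
    branch: main
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: apps
  namespace: flux-system
spec:
  interval: 10m                # how often the desired state is re-applied
  sourceRef:
    kind: GitRepository
    name: flux-system
  path: ./apps
  prune: true                  # delete cluster objects that were removed from git
```

With `prune: true`, removing a manifest from the repository also removes the object from the cluster, so git stays the single source of truth.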

Why k3s

I’ll discuss this in the next post, but unfortunately, the managed Kubernetes offerings are out of my target budget. In the end, I settled on a single-node k8s cluster that I would manage myself, on a baremetal machine.

The last time I did that was with the Bootstrap Kubernetes the hard way tutorial… I didn’t want to do that again 😄

Fortunately, there are a few good options to set up a k8s cluster on your own servers:

I think all of them would match my needs, but I ended up going with k3s, which can get a cluster up and running with a single command in about 10 seconds.

In my case:

curl -sfL https://get.k3s.io | sh -s - server --cluster-init --tls-san=<tailscale-ip> --node-ip=<tailscale-ip> --node-external-ip=<public-ip>

I also tried k3sup, which can be used to bootstrap a cluster over SSH. I didn’t see any advantage for my use case, so I didn’t use it.

The cool thing with k3s is that, to upgrade k3s and its components (including Kubernetes), I just have to run the install command again, and that’s it.
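k3s can also read its options from a configuration file, by default `/etc/rancher/k3s/config.yaml`, with keys that mirror the CLI flags. As a sketch under that assumption, the flags from the install command could be kept on the host like this; the IP addresses are placeholders from reserved ranges, not real values.

```yaml
# /etc/rancher/k3s/config.yaml
# Sketch only: addresses are placeholders.
cluster-init: true
tls-san:
  - "100.64.0.1"                   # Tailscale IP (placeholder)
node-ip: "100.64.0.1"              # Tailscale IP (placeholder)
node-external-ip: "203.0.113.10"   # public IP (placeholder)
```

With the options in a file, re-running the installer for an upgrade should not require repeating every flag.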

As I said, the goal is to have my whole infrastructure set up declaratively. The only manual thing I did was to run that k3s install command… I didn’t dare write an Ansible role for that 😄

k8s holds all my apps. The host itself has nothing besides k3s. I only use 3 roles:

- ansible-base which does the overall setup of my hosts (base packages, sane defaults, etc.)

- ansible-ufw (private role), which basically closes all ports besides 80 and 443. I access anything private through tailscale, so no need to expose the Kubernetes API.

- ansible-restic that backs up the whole host. This is mostly for the k3s config (including the keys, etc.) and the volumes. None of the k8s-based backup solutions convinced me so far.

Storage

For the volumes, I use local-path-provisioner since I have a single non-cloud node. I can thus leverage the raw speed of my NVMe SSDs.

It’s my default storage class:

    ➜ ~ kubectl get sc
    NAME                   PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    local-path (default)   rancher.io/local-path   Delete          WaitForFirstConsumer   false                  13d

I can use it as any other storage class. The only caveat is that the requested size is not enforced, so I don’t need to worry about sizing volumes, other than monitoring the disk usage on the host.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: redis-data
      namespace: larafeed
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
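A workload then references the claim by name. As a minimal sketch of how the `redis-data` claim above could be consumed, here is a Redis Deployment that mounts it; the Deployment name, labels and image tag are assumptions made for the example.

```yaml
# Sketch only: name, labels and image tag are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
  namespace: larafeed
spec:
  replicas: 1
  strategy:
    type: Recreate               # stop the old pod first so two Redis pods never write to the same volume
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
        - name: redis
          image: redis:7
          volumeMounts:
            - name: data
              mountPath: /data   # default data directory of the official Redis image
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: redis-data  # must match the PVC name, in the same namespace
```

Because the storage class uses `WaitForFirstConsumer`, the volume is only provisioned once a pod like this one is scheduled.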

For local-path, every volume corresponds to a directory in /var/lib/rancher/k3s/storage/, which is what makes it easy to back up from the host.

    root@silo ~# du -hs /var/lib/rancher/k3s/storage/* | sort -hr
    77G   /var/lib/rancher/k3s/storage/pvc-6ac223b9-1455-4bd8-b5b1-85640719de0f_mstdn_mstdn-cnpg-1
    7.5G  /var/lib/rancher/k3s/storage/pvc-d47beaeb-049e-4fe3-b9ef-70270d59a17c_kpop_kpop-cnpg-1
    5.1G  /var/lib/rancher/k3s/storage/pvc-0cc4e143-6563-4353-b61b-59a3e2011dec_mstdn_elasticsearch-data-mstdn-es-default-0
    3.4G  /var/lib/rancher/k3s/storage/pvc-f6c863e6-962c-45c3-b62f-741a585061b2_monitoring_prometheus-kube-prometheus-stack-db-prometheus-kube-prometheus-stack-0
    2.3G  /var/lib/rancher/k3s/storage/pvc-8714cde8-2078-4ba5-9f1f-3228e39a2bff_matomo_storage-mariadb-0
    1.4G  /var/lib/rancher/k3s/storage/pvc-a2123ab6-e707-4d61-a37a-215a9d17e505_wordpress_wordpress
    932M  /var/lib/rancher/k3s/storage/pvc-1137379c-9451-4449-9e86-0f128e3d6cc5_umami_umami-cnpg-1
    799M  /var/lib/rancher/k3s/storage/pvc-32ca3dca-b796-4a3d-aa79-368a2f5a2384_miniflux_miniflux-cnpg-1
    669M  /var/lib/rancher/k3s/storage/pvc-40bfffa9-643e-4b46-b3e2-3f513c2a00a8_kpop_elasticsearch-data-kpop-es-default-0
    649M  /var/lib/rancher/k3s/storage/pvc-da209fe1-52e0-460f-a794-10a399c0c9eb_larafeed_larafeed-cnpg-1
    599M  /var/lib/rancher/k3s/storage/pvc-5a4b35d9-7301-40b9-bea4-b0cd6c95564b_mastodon_mastodon-cnpg-1
    375M  /var/lib/rancher/k3s/storage/pvc-9ed6fe91-1b02-4df9-b38b-1d80179b5b97_wordpress_storage-mariadb-0
    173M  /var/lib/rancher/k3s/storage/pvc-6c11ab34-8045-420d-b427-61b51bf46912_mstdn_redis-data
    111M  /var/lib/rancher/k3s/storage/pvc-dc293d08-afe5-4990-8638-63534328efe1_matomo_matomo
    40M   /var/lib/rancher/k3s/storage/pvc-f212f36c-7bc4-4c5e-8219-ff6e9f7d8485_kpop_redis-data
    7.9M  /var/lib/rancher/k3s/storage/pvc-4920f8bc-fa29-47e6-8a40-0888c09a0fb3_mastodon_elasticsearch-data-mastodon-es-default-0
    4.5M  /var/lib/rancher/k3s/storage/pvc-e0f11f2a-ea6d-468d-b7e2-a00edf735b67_shaarli_shaarli-cache
    3.8M  /var/lib/rancher/k3s/storage/pvc-4ec6d292-a4c9-454a-ac7e-a53a6e7cd660_shaarli_shaarli-data
    2.8M  /var/lib/rancher/k3s/storage/pvc-96aaf5dd-8481-4d6a-8222-876dc5645427_mastodon_redis-data
    512K  /var/lib/rancher/k3s/storage/pvc-3ef11da4-7615-4ace-8966-b34e23c1942d_isso_isso-db
    16K   /var/lib/rancher/k3s/storage/pvc-99b7ae97-7bcc-42a0-8222-a799d33c5d23_larafeed_redis-data

I also experimented with Longhorn, especially for the native backup to S3 feature, but to be honest, it was overkill for me.

For the backups, I tried Velero and k8up but I’m not very confident in them.

My backup strategy with restic works pretty well. I can restore specific files in specific volumes if needed, or the entire cluster.

Also, migrating servers is very easy: I just need to set up the new server, rsync /etc/rancher and /var/lib/rancher, install k3s, update the DNS records, and done! That’s also what I was trying to achieve when I considered moving back to Docker: easy migration between hosts.

Ingress controllers

k3s includes Traefik via a Helm chart, which is nice as you then have an ingress controller out of the box. I’m a bit torn by this though. Right now the Helm chart still installs v2, even though v3 was released almost a year ago. There’s a way to replace the bundled Traefik with another ingress controller, but I’m fine with the default as v2 works perfectly.
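For reference, k3s lets you opt out of its packaged components. A minimal sketch of what disabling the bundled Traefik could look like in the k3s configuration file (this is not something done in the setup described here, where the default is kept):

```yaml
# /etc/rancher/k3s/config.yaml
# Sketch only: tells k3s not to deploy its packaged Traefik,
# so that another ingress controller can be installed instead.
disable:
  - traefik
```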

That also means I’m leaving Caddy behind 💔. I switched to Caddy I think 7 years ago, and I haven’t touched a single Let’s Encrypt certificate since. I do love Nginx, but Caddy really made everything simpler.

There’s a WIP ingress controller for Caddy, but I don’t see any reason to use it, as Traefik has been one of the standards in k8s for a while now, and is rock-solid.

Certificate management in k8s is very easy. Like Caddy, Traefik has a built-in ACME client to generate certificates. However, if you have multiple nodes running, you need to orchestrate Traefik so that it does not request the certificate multiple times, and share the certificate between nodes. For this reason, using the cert-manager controller is usually the way to go.

Through custom resource definitions, cert-manager picks up an annotation on the Ingress, creates a certificate request, contacts the issuer (Let’s Encrypt) to obtain a certificate, and stores it in a secret. Like with Caddy, I use the Cloudflare API, where my domains are hosted, so that the controller can use the DNS challenge to create certificates, which is much more robust and flexible than the HTTP challenge.

Here is what my setup looks like:

infrastructure/configs/cluster-issuers.yaml

    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: cloudflare-api-token-secret
      namespace: cert-manager
    type: Opaque
    stringData:
      api-token: xxx
    ---
    apiVersion: cert-manager.io/v1
    kind: ClusterIssuer
    metadata:
      name: letsencrypt-prod-dns-cf
    spec:
      acme:
        server: https://acme-v02.api.letsencrypt.org/directory
        email: [email protected]
        privateKeySecretRef:
          name: letsencrypt
        solvers:
          - dns01:
              cloudflare:
                apiTokenSecretRef:
                  name: cloudflare-api-token-secret
                  key: api-token

apps/mstdn/web/ingress.yaml

    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: mastodon-web
      namespace: mstdn
      annotations:
        cert-manager.io/cluster-issuer: "letsencrypt-prod-dns-cf"
    spec:
      tls:
        - hosts:
            - &host mstdn.io
          secretName: mstdn-tls
      rules:
        - host: *host
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: mastodon-web
                    port:
                      number: 3000

Not so bad!
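Behind the scenes, the `cert-manager.io/cluster-issuer` annotation on the Ingress above leads cert-manager to generate a `Certificate` resource on its own. The following is a sketch of roughly what that generated object looks like, reconstructed from the Ingress fields and abbreviated; it is shown for illustration and is not a file to write by hand.

```yaml
# Sketch of the generated resource, abbreviated.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: mstdn-tls              # named after the Ingress tls secretName
  namespace: mstdn
spec:
  secretName: mstdn-tls        # Secret where the issued certificate and key are stored
  dnsNames:
    - mstdn.io
  issuerRef:
    group: cert-manager.io
    kind: ClusterIssuer
    name: letsencrypt-prod-dns-cf
```

Inspecting this object with `kubectl describe certificate` is usually the first step when a certificate is not being issued.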

It’s been a success so far

I’ve been running my new setup for a few weeks now. The initial setup took some time, as I had to test run and migrate quite a few services, and write a lot of YAML. But I’ve been really happy with the result.

My baremetal machine has very little configuration, besides the firewall and the backups, as discussed earlier. Everything else is running inside Kubernetes, with 100% of it being managed by YAML inside a git repository and reconciled live by Flux.

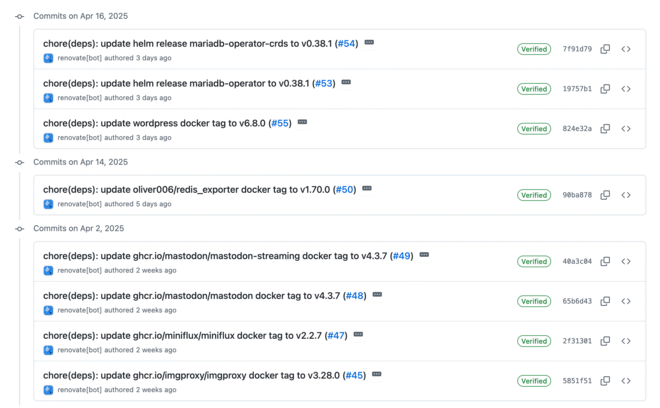

In terms of application management, it’s night and day. Some apps that required manual commands, long dependency installations, and builds can now be updated by simply bumping a version number in the manifests. I leverage Renovate to do it for me through a Pull Request, which means I basically have one button to click! From 20 minutes down to 10 seconds… not bad!
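To show what “bumping a version number” means in practice, here is a minimal sketch of a Deployment where the whole upgrade is a one-line change to the image tag. The app, names, port and tag are illustrative assumptions, not a real manifest.

```yaml
# Sketch only: names, port and tag are illustrative.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: miniflux
  namespace: miniflux
spec:
  replicas: 1
  selector:
    matchLabels:
      app: miniflux
  template:
    metadata:
      labels:
        app: miniflux
    spec:
      containers:
        - name: miniflux
          # An upgrade is a change to this tag only; a tool like Renovate
          # can propose that change as a Pull Request.
          image: ghcr.io/miniflux/miniflux:2.2.5
          ports:
            - containerPort: 8080
```

Once the change is merged, Flux notices the new commit and rolls out the new image without any command being run on the host.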

Objective achieved 🤝.