My writing workflow with LLMs

The old days: web-based editors #

I began blogging in 2014, in French, using WordPress as my CMS. When I launched this blog, I chose Ghost. Both platforms offer web-based editors, which let me take advantage of the spell checking built into the operating system or browser. Additionally, browser extensions like Grammarly (or LanguageTool) provided more sophisticated checks for grammar and style.

Writing in Markdown #

When I switched to a static site generator a few years ago, I lost the spell checking, as I now had to write Markdown outside of the browser.

There are some workarounds. For example, headless CMS solutions exist for Hugo, which means you can write content in a web-based CMS such as Tina and generate the static site with Hugo. Another possibility is to use a dedicated Markdown editor with spell checking.

My VS Code setup #

I like to write in VS Code, my editor of choice since I ditched Atom and Sublime Text in 2018. Fortunately, there is a Code Spell Checker extension. It’s a basic spell checker, but it works well for typos in code and also in pure Markdown.

It doesn’t catch every typo, though, and it doesn’t catch grammatical errors at all.

On that note, I also use the great FrontMatter extension for VS Code, which has some handy tools for managing Front Matter content.

Checking for syntax and grammar errors #

To catch more typos and language errors, I used to dump my Markdown into the Grammarly web app. It wasn’t ideal, but it helped me catch more errors.

At some point, I discovered a VS Code extension for Grammarly, which was pretty helpful and better than the basic spell checkers. However, Grammarly deprecated their SDK last year, so this extension doesn’t work anymore. :(

Introducing GitHub Copilot into my workflow #

When I got access to the GitHub Copilot beta back in the summer of 2021, I quickly realized that it could help me write blog posts: it started finishing my sentences. This was great when it actually guessed what I had in mind.

Like many others, I experience the “Copilot pause”: the short interval right after I type something, during which I wait for the LLM to suggest a completion I can accept with Tab.

Autocompletion breaks the flow of thoughts for writing #

I accept it when coding because it’s useful most of the time, though sometimes annoying as well. When writing documentation, it’s usually useful. But when writing blog posts, I found that it does more harm than good, as it breaks my flow: instead of having one stream of thoughts flowing through my fingers to my keyboard, this flow gets interrupted by the possibility of Copilot generating something.

I decided to disable it for my blog so that I can write more freely, in my own style.

"github.copilot.enable": {
  "markdown": false
}

Indeed, I found that a better workflow for me is to write whatever comes to mind, edit it the way I want, and then bring the LLMs to the party.

Using LLMs for proofreading #

Even without completions, we can still make use of LLMs. In my case, my keyboard accuracy is pretty bad, so I make a lot of typos when I write. English is also not my native language, so I often make grammatical errors. LLMs are pretty good at helping with that!

There are multiple ways of using LLMs for proofreading.

One is to copy and paste the Markdown into the LLM chat of your choice and ask it to proofread, either by requesting a revised version or by asking it to pinpoint errors. The first option is a bit dangerous, as you can never be sure what the LLM changed. With git it’s manageable, though: I can paste the LLM output back and look at the diff to decide which changes to keep. I usually go with the “pinpoint errors” approach and make the edits myself.
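The diff-based review doesn’t strictly require git. As a minimal sketch, assuming the original draft and the LLM’s revised version are both available as strings, Python’s standard `difflib` module can produce the same kind of line-by-line diff. The function name `review_diff` and the file labels are hypothetical, chosen only for illustration.

```python
import difflib


def review_diff(original: str, revised: str) -> str:
    """Return a unified diff between my draft and the LLM's revision.

    An empty string means the LLM changed nothing.
    """
    diff = difflib.unified_diff(
        original.splitlines(keepends=True),
        revised.splitlines(keepends=True),
        fromfile="post.md (mine)",   # hypothetical label
        tofile="post.md (LLM)",      # hypothetical label
    )
    return "".join(diff)


if __name__ == "__main__":
    original = "I make alot of typos.\nThis line is fine.\n"
    revised = "I make a lot of typos.\nThis line is fine.\n"
    # Lines starting with "-" are mine, lines starting with "+" are the LLM's.
    print(review_diff(original, revised))
```

Every changed line shows up as a `-`/`+` pair, so nothing the LLM rewrote can slip in unnoticed. In practice, `git diff` on the pasted-back file gives the same view.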

I don’t use ChatGPT Canvas and similar products: they are pretty cool, but they require moving my content out of my editor and back again, which is annoying.

The best proofreading companion: Copilot Edits #

My current preferred way is to use Copilot Edits. (This also applies to similar products such as Cursor.)

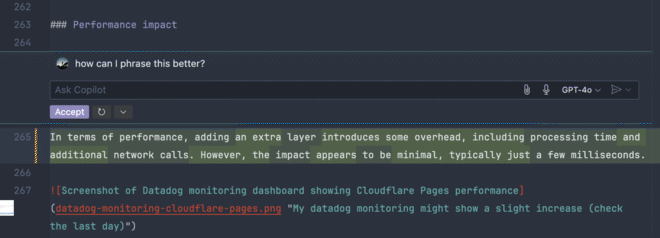

I can prompt it to proofread the content, and it will generate “edits” which I can accept or reject one by one! It’s much better.

There are different layers to this, depending on the prompt. First, you can simply ask it to proofread for typos and grammatical errors, then for sentence construction. You could also use it to find a better structure.

I found that I needed multiple runs to catch everything. Also, not all LLMs proofread the same way! I found that Gemini 2.0 Flash would do more rework than Sonnet or GPT-4o, for example. Anecdotally, I didn’t find the reasoning models to do a significantly better job. In any case, I still have my girlfriend to catch what slips through the net 😙.

When I have specific concerns about a paragraph, I can also use the inline Copilot.

I tried it on a few of my old entries, and it found some glaring mistakes that I had missed when proofreading myself! I would love to run it on the entire repo, but I would still have to review everything, even with the Agent mode.

A proofreader, at most a co-writer, not an author #

While it’s very useful for proofreading (or filling in the alt descriptions of images 😄), I’m very careful about using it for anything more than that.

I would not use it to write something from scratch, as I feel it takes away the personality from my writing, at least for this blog. Perhaps less so for documentation.

The writing process has many benefits. It forces me to understand the subject matter well enough to explain it clearly. It challenges me to research and fact-check what I’m writing. And it improves communication skills, which are useful in many other areas of life. Reducing writing to editing LLM output would eliminate most of these benefits.

Funnily enough, I started drafting this post before reading Writing for Developers. The book has a section on Generative AI, which essentially advises using it to enhance your writing, not to replace you as the writer. I think this approach makes sense!